Introduction

This project is first and foremost a set of tools aimed to help with step #2 of CIRP incident response, identification. It is not meant to be a complete end-to-end solution, but rather a reference implementation that needs to be adapted to the company's needs.

Effectively it is a set of queries from Snowflake Security

Dashboards wrapped in a Streamlit application. The individual

queries are kept as .sql files and can be used without installing the whole

application.

The Streamlit UI can be deployed as:

- Streamlit application: locally and in Snowflake

- Snowflake native application

- Docker container

See corresponding section for installation instructions. The easiest method to install Sentry is probably "Streamlit in Snowflake through a GitHub action".

Installation

This section contains instructions on installing Sentry in individual Snowflake accounts.

Quick start

The fastest way to deploy Sentry is through Git integration:

-- Optional: set up dedicated role to own the Streamlit app

USE ROLE useradmin;

CREATE OR REPLACE ROLE sentry_sis_role;

GRANT ROLE sentry_sis_role TO ROLE sysadmin;

-- End of role setup

-- Optional: database setup

USE ROLE sysadmin;

CREATE OR REPLACE DATABASE sentry_db;

-- End of database setup

-- Optional: if using a custom warehouse

-- TODO: Drop this when issue #8 is implemented

CREATE OR REPLACE WAREHOUSE sentry WITH

WAREHOUSE_SIZE = XSMALL

INITIALLY_SUSPENDED = TRUE

;

GRANT USAGE ON WAREHOUSE sentry to ROLE sentry_sis_role;

-- End of warehouse setup

USE ROLE ACCOUNTADMIN;

CREATE OR REPLACE API INTEGRATION gh_snowflake_labs

API_PROVIDER = GIT_HTTPS_API

API_ALLOWED_PREFIXES = ('https://github.com/Snowflake-Labs')

ENABLED = TRUE;

USE ROLE sysadmin;

CREATE OR REPLACE GIT REPOSITORY sentry_db.public.sentry_repo

API_INTEGRATION = GH_SNOWFLAKE_LABS

ORIGIN = 'https://github.com/Snowflake-Labs/Sentry/';

-- Optional, if using custom role

GRANT USAGE ON DATABASE sentry_db TO ROLE sentry_sis_role;

GRANT USAGE ON SCHEMA sentry_db.public TO ROLE sentry_sis_role;

GRANT READ ON GIT REPOSITORY sentry_db.public.sentry_repo TO ROLE sentry_sis_role;

GRANT CREATE STREAMLIT ON SCHEMA sentry_db.public TO ROLE sentry_sis_role;

USE ROLE accountadmin;

GRANT IMPORTED PRIVILEGES ON DATABASE SNOWFLAKE TO ROLE sentry_sis_role;

USE ROLE sentry_sis_role;

--

CREATE OR REPLACE STREAMLIT sentry_db.public.sentry

ROOT_LOCATION = '@sentry_db.public.sentry_repo/branches/main/src'

MAIN_FILE = '/Authentication.py'

QUERY_WAREHOUSE = SENTRY; -- Replace the warehouse if needed

-- Share the streamlit app with needed roles

GRANT USAGE ON STREAMLIT sentry_db.public.sentry TO ROLE SYSADMIN;

Streamlit in Snowflake

These instructions will walk you through setting up Sentry as a Streamlit in Snowflake application. This approach is best if you don't want to manage the python runtime environment.

Git integration

To deploy Sentry from a single set of SQL statements:

-- Optional: set up dedicated role to own the Streamlit app

USE ROLE useradmin;

CREATE OR REPLACE ROLE sentry_sis_role;

GRANT ROLE sentry_sis_role TO ROLE sysadmin;

-- End of role setup

-- Optional: database setup

USE ROLE sysadmin;

CREATE OR REPLACE DATABASE sentry_db;

-- End of database setup

-- Optional: if using a custom warehouse

-- TODO: Drop this when issue #8 is implemented

CREATE OR REPLACE WAREHOUSE sentry WITH

WAREHOUSE_SIZE = XSMALL

INITIALLY_SUSPENDED = TRUE

;

GRANT USAGE ON WAREHOUSE sentry to ROLE sentry_sis_role;

-- End of warehouse setup

USE ROLE ACCOUNTADMIN;

CREATE OR REPLACE API INTEGRATION gh_snowflake_labs

API_PROVIDER = GIT_HTTPS_API

API_ALLOWED_PREFIXES = ('https://github.com/Snowflake-Labs')

ENABLED = TRUE;

USE ROLE sysadmin;

CREATE OR REPLACE GIT REPOSITORY sentry_db.public.sentry_repo

API_INTEGRATION = GH_SNOWFLAKE_LABS

ORIGIN = 'https://github.com/Snowflake-Labs/Sentry/';

-- Optional, if using custom role

GRANT USAGE ON DATABASE sentry_db TO ROLE sentry_sis_role;

GRANT USAGE ON SCHEMA sentry_db.public TO ROLE sentry_sis_role;

GRANT READ ON GIT REPOSITORY sentry_db.public.sentry_repo TO ROLE sentry_sis_role;

GRANT CREATE STREAMLIT ON SCHEMA sentry_db.public TO ROLE sentry_sis_role;

USE ROLE accountadmin;

GRANT IMPORTED PRIVILEGES ON DATABASE SNOWFLAKE TO ROLE sentry_sis_role;

USE ROLE sentry_sis_role;

--

CREATE OR REPLACE STREAMLIT sentry_db.public.sentry

ROOT_LOCATION = '@sentry_db.public.sentry_repo/branches/main/src'

MAIN_FILE = '/Authentication.py'

QUERY_WAREHOUSE = SENTRY; -- Replace the warehouse if needed

-- Share the streamlit app with needed roles

GRANT USAGE ON STREAMLIT sentry_db.public.sentry TO ROLE SYSADMIN;

Manual setup

The steps are:

-

Run the following SQL code to set up a dedicated database and role for the application.

The created role will be have

OWNERSHIPprivilege on the application. Feel free to customize these steps if you would like to change the Sentry's runtime environment.

-- This script creates necessary objects to deploy Sentry in a separate database as a user with limited privileges.

USE ROLE useradmin;

-- User and role to deploy the Streamlit app as

CREATE OR REPLACE ROLE sentry_sis_role

;

CREATE OR REPLACE USER sentry_sis_user

DEFAULT_NAMESPACE = sentry_db.public

DEFAULT_ROLE = sentry_sis_role

;

GRANT ROLE sentry_sis_role TO USER sentry_sis_user;

USE ROLE sysadmin;

-- Database

CREATE OR REPLACE DATABASE sentry_db

;

-- Background for these permissions:

-- https://docs.snowflake.com/en/developer-guide/streamlit/owners-rights#about-app-creation

GRANT USAGE ON DATABASE sentry_db TO ROLE sentry_sis_role;

GRANT USAGE ON SCHEMA sentry_db.public TO ROLE sentry_sis_role;

GRANT CREATE STREAMLIT ON SCHEMA sentry_db.public TO ROLE sentry_sis_role;

GRANT CREATE STAGE ON SCHEMA sentry_db.public TO ROLE sentry_sis_role;

-- Warehouse

-- TODO: Drop this when issue #8 is implemented

CREATE OR REPLACE WAREHOUSE sentry WITH

WAREHOUSE_SIZE = XSMALL

INITIALLY_SUSPENDED = TRUE

;

GRANT USAGE ON WAREHOUSE sentry to role sentry_sis_role;

-- Grant access to SNOWFLAKE database

-- For more fine-grained access see:

-- https://docs.snowflake.com/en/sql-reference/account-usage.html#label-enabling-usage-for-other-roles

USE ROLE accountadmin;

GRANT IMPORTED PRIVILEGES ON DATABASE snowflake TO ROLE sentry_sis_role;

-

Set up authentication for the created user

-

Run one of the deployment methods below to send the code into Snowflake and set up

STREAMLITobject

If using nix, this step is (mostly) automated through sis-setUp application.

Github action

This approach may be paired with a CI system that implements additional checks. The deployment is done through a GitHub action.

-

(after running SQL above) Fork/clone the source code repository

-

In the forked repository, open

Settings>Secrets and variables>Actions -

Set up following action secrets:

SIS_OWNER_ROLE– which role should own the Streamlit appSIS_GRANT_TO_ROLE– which role should have access to the Streamlit (e.g.ACCOUNTADMIN)SIS_QUERY_WAREHOUSE– warehouse for running StreamlitSIS_APP_DATABASE– which database should contain the Streamlit applicationSNOWFLAKE_ACCOUNT– which Snowflake account to deploy Streamlit inSNOWFLAKE_USER– user to authenticateSNOWFLAKE_PRIVATE_KEY– private key to authenticate

-

Go to

Actionsand click "Run" onDeploy Streamlit in Snowflake

The steps above will deploy the application and grant USAGE on it to a specified role.

Under the hood the action uses Nix-based application that you can also run on your development machine.

Pushing from local machine using snowcli

Steps in this section will use Snowflake cli to deploy the application. These options are more suitable for a development environment as they involve some very specific tools and require familiarity with command line.

Without Nix

- Install Snowflake cli and configure a connection.

- Clone the source code repository

- Change directory to

src - Adjust

snowflake.ymlto suit your environment - Run

snow streamlit deploy

With Nix

- Have prerequisites:

- Configure snowcli connection. Repository provides

snowas part of the development shell, thus no need to install it - Clone the source code repository

- Go to the cloned directory

- Inspect, adjust

.envrcand.envand allow it (direnv allow) - Run

sis-deploy

Local Streamlit application

These instructions will set up a python environment to run Sentry in.

-

(if

poetryis not installed) Install poetry using steps from official documentation. -

Clone the source code and change current directory to its root

-

Run

poetry installto install all dependencies -

Set up Streamlit secrets.

If using project-specific secrets,

.streamlitdirectory needs to be created in the root of the application.Ensure that

connections.defaultis specified in the secrets file, for example:[connections.default] account = "<accountName>" user = "<userName>" warehouse = "<whName>" role = "<role>" # Any role with access to ACCOUNT_USAGE private_key_file = "<pathToPrivateKeyFile>" -

Run

poetry shellto activate the virtual environment -

Run

streamlit run src/Authentication.py -

Open the URL provided by Streamlit in the terminal (typically

http://localhost:8501)

Docker container

These instructions will set up a Docker container with the application.

-

Clone the source code and change current directory to its root

-

Create a directory

.streamlitin the root of the cloned repository -

Create a file

secrets.tomlinside.streamlitdirectory with contents like:[connections.default] account = "<accountName>" user = "<userName>" password = "<superSecretPassword>" warehouse = "<whName>" role = "<role>" # Any role with access to ACCOUNT_USAGESee more information on the Streamlit secrets here.

-

Build and run the docker image:

$ docker build . -t sentry:latest -f deployment_models/local-docker/Dockerfile ... naming to docker.io/library/sentry:latest $ docker run --rm --mount type=bind,source=$(pwd)/.streamlit,target=/app/.streamlit,readonly --publish-all sentry:latest ... You can now view your Streamlit app in your browser. ...Replace

$(pwd)/.streamlitwith a path to the directory containing Streamlit secrets toml file if using a different secrets location.--publish-allwill assign a random port to the container; you can usedocker psto determine which port is forwarded to8501inside the container. -

(if needed) find out the port that Docker assigned to the container using

docker ps:$ docker ps --format "{{.Image}}\t{{.Ports}}" sentry:latest 0.0.0.0:55000->8501/tcp -

Open

http://localhost:55000in your browser

Native application

It is possible to install Sentry as a native application. Currently it is not available on Snowflake marketplace, but using steps below it's possible to install it in one account and use private listings to share it within the organization.

Deployment steps requires Snowflake cli. While it is possible to

create the application using UI and SQL, snow allows to save quite a bit of

time.

- Install Snowflake cli and configure a connection.

- Clone the source code repository

- Change directory to

deployment_models/native-app - Adjust

snowflake.ymlto suit your environment (see below section for dedicated deployment role) - Run

snow app run

Restricting the deployment role

This SQL allows setting up a role that can only deploy a native application. Using it is optional but recommended.

-- Allows specified role ('sentry_sis_role' in this case) to deploy the application in own account

USE ROLE ACCOUNTADMIN;

GRANT CREATE APPLICATION PACKAGE ON ACCOUNT TO ROLE sentry_sis_role; -- To create the initial package

GRANT CREATE APPLICATION ON ACCOUNT TO ROLE sentry_sis_role; -- To deploy the package

-- Stored procedure allows a non-ACCOUNTADMIN to grant access to SNOWFLAKE database to the application

CREATE DATABASE IF NOT EXISTS srv;

CREATE OR REPLACE SCHEMA srv.sentry_na_deploy;

GRANT USAGE ON DATABASE SRV TO ROLE sentry_sis_role;

GRANT USAGE ON SCHEMA srv.sentry_na_deploy TO ROLE sentry_sis_role;

CREATE OR REPLACE PROCEDURE SUDO_GRANT_IMPORTED_PRIVILEGES(APP_NAME VARCHAR)

RETURNS BOOLEAN

LANGUAGE JAVASCRIPT

STRICT

COMMENT = 'Allows granting access to SNOWFLAKE database to applications as a non-ACCOUNTADMIN user'

EXECUTE AS OWNER

AS

$$

var cmd = "GRANT IMPORTED PRIVILEGES ON DATABASE snowflake TO APPLICATION IDENTIFIER(:1)";

var stmt = snowflake.createStatement(

{

sqlText: cmd,

binds: [APP_NAME]

}

);

var result1 = stmt.execute();

result1.next();

return result1.getColumnValue(1);

$$;

GRANT USAGE ON PROCEDURE SUDO_GRANT_IMPORTED_PRIVILEGES(VARCHAR) TO ROLE sentry_sis_role;

The exact names for objects can be adjusted as needed.

Stored procedures

The Sentry queries can be used without the accompanying Streamlit UI. They can be run from worksheets, notebooks, stored procedures or external systems.

The stored procedures can be deployed using Snowflake git integration using the following steps:

-

Set up API integration:

CREATE OR REPLACE API INTEGRATION sentry_public_github API_PROVIDER=git_https_api API_ALLOWED_PREFIXES=('https://github.com/Snowflake-Labs/Sentry') enabled = true; -

Set up git repository in currently selected database:

CREATE OR REPLACE GIT REPOSITORY sentry_repo api_integration = sentry_public_github origin = "https://github.com/Snowflake-Labs/Sentry";

The individual queries can be run using EXECUTE IMMEDIATE FROM, e.g.:

EXECUTE IMMEDIATE FROM @sentry_repo/branches/main/src/queries/auth_by_method/auth_by_method.sql;

Alternatively, the stored procedures can be created from a single file:

EXECUTE IMMEDIATE FROM @sentry_repo/branches/main/deployment_models/git-repository/create_all.sql;

Usage

Streamlit application

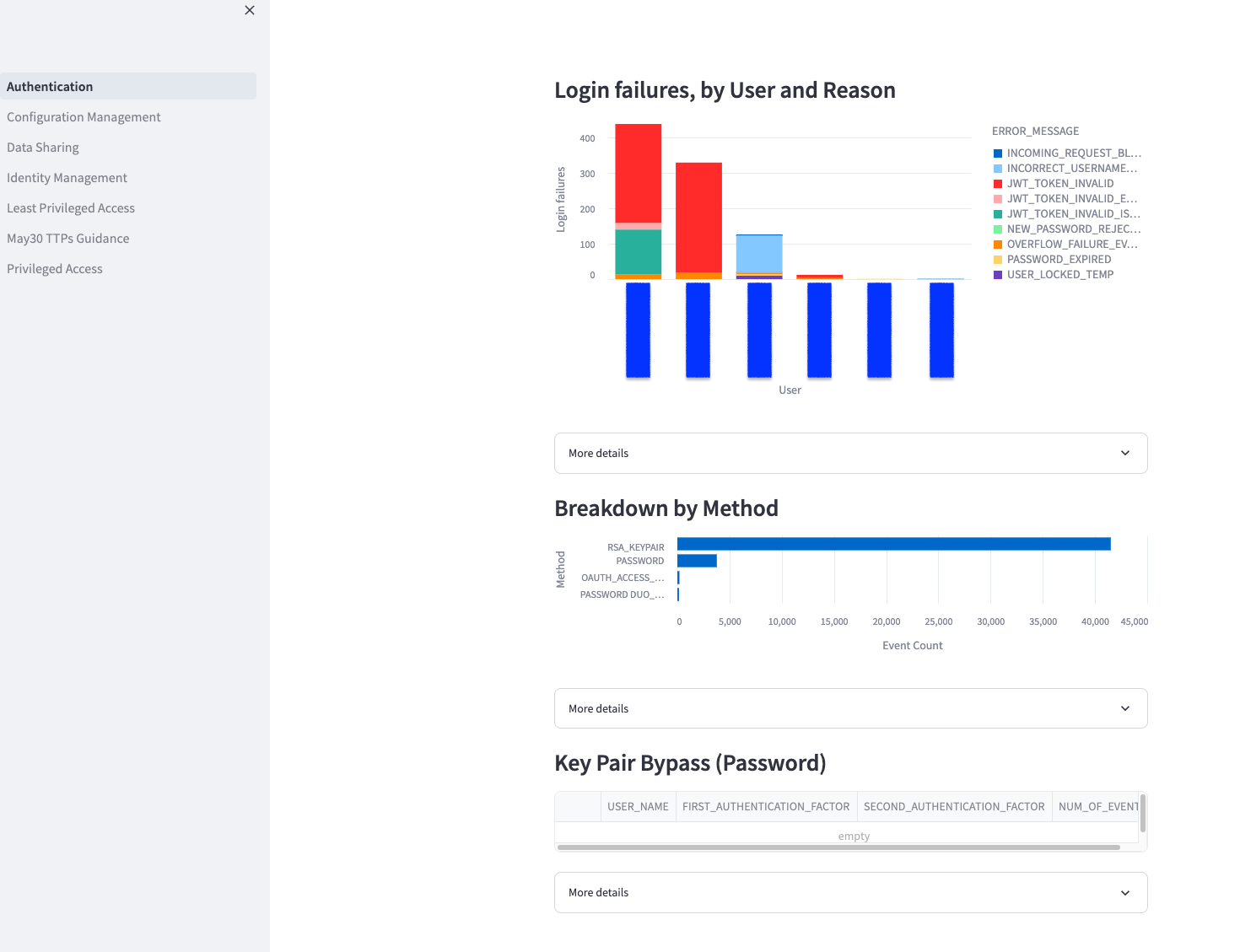

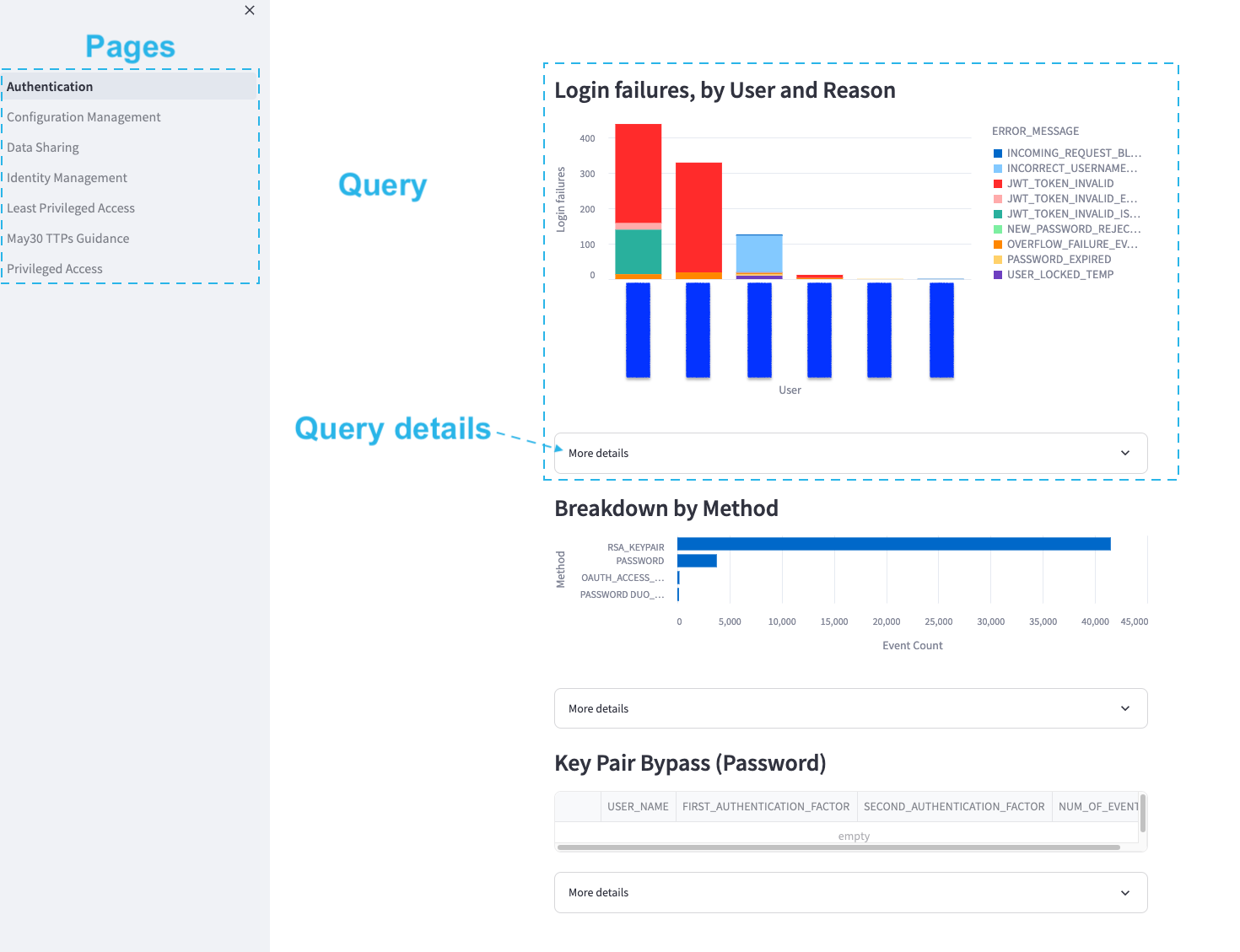

The queries that Sentry provides are separated into Streamlit pages. Each page contains a set of queries with more details on each query collapsed in an expander:

The details typically contain SQL text of the query and in some cases some additional information.

Update

Sentry does not keep any internal state, so all update instructions are essentially "drop and re-create". See the sidebar for update instructions applicable to the installation method.

Streamlit in Snowflake

Depending on the installation method, you may want to use different methods of updating Sentry installed as Streamlit in Snowflake.

Git integration

Application installed through the Git

integration method

can be updated by running ALTER GIT REPOSITORY FETCH

(doc).

To run this command periodically, you may want to CREATE TASK(doc).

Other installation methods

Sentry is stateless, so it can be updated by re-running the installation instructions skipping the initial SQL code.

One difference is that when using snow CLI do deploy the code -- make sure to

call it with --replace flag: snow streamlit deploy --replace.

If you are using a forked repository, you can use "sync fork" functionality on GitHub to propagate changes from main code repository to your fork.

If you have cloned the source code locally and want to retain the local

uncommitted changes, consider using git stash to store the local changes and

git stash pop to apply them.

Local Streamlit application

To update the local Streamlit application:

- Use

git pullto incorporate changes from main source code repository into your local copy - re-run the Streamlit application -- either by restarting

streamlitprocess or by usingRe-runbutton in Streamlit UI.

Docker container

To update Sentry running in a local container:

-

Use

git pullto incorporate changes from main source code repository into your local copy -

Follow the instructions from installation document from

docker buildpoint to re-build the container image

Native application

Updating a Native Application needs to be done on both provider account (where the application package is created) and the consumer side (where the application is installed).

Application provider account

- Use

git pullto incorporate changes from main source code repository into your local copy - Use

snow app deployto propagate changes to the application package - Create an application version and publish it

Application consumer account

Run ALTER APPLICATION <appName> UPGRADE (doc)

Stored procedures

To update the code of stored procedures:

-

Run

ALTER GIT REPOSITORY <name> FETCHto propagate changes (doc) -

If using

create_all.sqlto mass-create the stored procedures, re-run:EXECUTE IMMEDIATE FROM @sentry_repo/branches/main/deployment_models/git-repository/create_all.sql;Otherwise, if running individual queries using

EXECUTE IMMEDIATE, nothing needs to be done since it will automatically use the latest version from the repository.

Queries

ACCOUNTADMIN Grants

All existing and especially new AA grants should be few, rare and well-justified.

CREATE OR REPLACE PROCEDURE SENTRY_accountadmin_grants ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

user_name || ' granted the ' || role_name || ' role on ' || end_time as Description, query_text as Statement

from

SNOWFLAKE.ACCOUNT_USAGE.query_history

where

execution_status = 'SUCCESS'

and query_type = 'GRANT'

and query_text ilike '%grant%accountadmin%to%'

order by

end_time desc

);

RETURN TABLE(res);

END

$$

ACCOUNTADMINs that do not use MFA

CREATE OR REPLACE PROCEDURE SENTRY_accountadmin_no_mfa ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select u.name,

timediff(days, last_success_login, current_timestamp()) || ' days ago' last_login ,

timediff(days, password_last_set_time,current_timestamp(6)) || ' days ago' password_age

from SNOWFLAKE.ACCOUNT_USAGE.users u

join SNOWFLAKE.ACCOUNT_USAGE.grants_to_users g on grantee_name = name and role = 'ACCOUNTADMIN' and g.deleted_on is null

where ext_authn_duo = false and u.deleted_on is null and has_password = true

order by last_success_login desc

);

RETURN TABLE(res);

END

$$

Breakdown by Method

Recommendation: enforce modern authentication via SAML, Key Pair, OAUTH.

CREATE OR REPLACE PROCEDURE SENTRY_auth_by_method ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

first_authentication_factor || ' ' ||nvl(second_authentication_factor, '') as authentication_method

, count(*)

from SNOWFLAKE.ACCOUNT_USAGE.login_history

where is_success = 'YES'

and user_name != 'WORKSHEETS_APP_USER'

group by authentication_method

order by count(*) desc

);

RETURN TABLE(res);

END

$$

Key Pair Bypass (Password)

Note: this query would need to be adjusted to reflect the service user naming convention.

CREATE OR REPLACE PROCEDURE SENTRY_auth_bypassing ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(SELECT

l.user_name,

first_authentication_factor,

second_authentication_factor,

count(*) as Num_of_events

FROM SNOWFLAKE.ACCOUNT_USAGE.login_history as l

JOIN SNOWFLAKE.ACCOUNT_USAGE.users u on l.user_name = u.name and l.user_name ilike '%svc' and has_rsa_public_key = 'true'

WHERE is_success = 'YES'

AND first_authentication_factor != 'RSA_KEYPAIR'

GROUP BY l.user_name, first_authentication_factor, second_authentication_factor

ORDER BY count(*) desc

);

RETURN TABLE(res);

END

$$

Average Number of Role Grants per User (~5-10)

CREATE OR REPLACE PROCEDURE SENTRY_avg_number_of_role_grants_per_user ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(with role_grants_per_user (user, role_count) as (

select grantee_name as user, count(*) role_count from SNOWFLAKE.ACCOUNT_USAGE.grants_to_users where deleted_on is null group by grantee_name order by role_count desc)

select round(avg(role_count),1) from role_grants_per_user

);

RETURN TABLE(res);

END

$$

Bloated roles

Roles with largest amount of effective privileges

CREATE OR REPLACE PROCEDURE SENTRY_bloated_roles ()

RETURNS TABLE(ROLE String, NUM_OF_PRIVS Integer)

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(--Role Hierarchy

with role_hier as (

--Extract all Roles

select

grantee_name,

name

from

SNOWFLAKE.ACCOUNT_USAGE.grants_to_roles

where

granted_on = 'ROLE'

and privilege = 'USAGE'

and deleted_on is null

union all

--Adding in dummy records for "root" roles

select

'root',

r.name

from

SNOWFLAKE.ACCOUNT_USAGE.roles r

where

deleted_on is null

and not exists (

select

1

from

SNOWFLAKE.ACCOUNT_USAGE.grants_to_roles gtr

where

gtr.granted_on = 'ROLE'

and gtr.privilege = 'USAGE'

and gtr.name = r.name

and deleted_on is null

)

) --CONNECT BY to create the polyarchy and SYS_CONNECT_BY_PATH to flatten it

,

role_path_pre as(

select

name,

level,

sys_connect_by_path(name, ' -> ') as path

from

role_hier connect by grantee_name = prior name start with grantee_name = 'root'

order by

path

) --Removing leading delimiter separately since there is some issue with how it interacted with sys_connect_by_path

,

role_path as (

select

name,

level,

substr(path, len(' -> ')) as path

from

role_path_pre

) --Joining in privileges from GRANT_TO_ROLES

,

role_path_privs as (

select

path,

rp.name as role_name,

privs.privilege,

granted_on,

privs.name as priv_name,

'Role ' || path || ' has ' || privilege || ' on ' || granted_on || ' ' || privs.name as Description

from

role_path rp

left join SNOWFLAKE.ACCOUNT_USAGE.grants_to_roles privs on rp.name = privs.grantee_name

and privs.granted_on != 'ROLE'

and deleted_on is null

order by

path

) --Aggregate total number of priv's per role, including hierarchy

,

role_path_privs_agg as (

select

trim(split(path, ' -> ') [0]) role,

count(*) num_of_privs

from

role_path_privs

group by

trim(split(path, ' -> ') [0])

order by

count(*) desc

)

select * from role_path_privs_agg order by num_of_privs desc

);

RETURN TABLE(res);

END

$$

Default Role is ACCOUNTADMIN

CREATE OR REPLACE PROCEDURE SENTRY_default_role_check ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select role, grantee_name, default_role

from SNOWFLAKE.ACCOUNT_USAGE."GRANTS_TO_USERS" join "SNOWFLAKE"."ACCOUNT_USAGE"."USERS"

on users.name = grants_to_users.grantee_name

where role = 'ACCOUNTADMIN'

and grants_to_users.deleted_on is null

and users.deleted_on is null

order by grantee_name

);

RETURN TABLE(res);

END

$$

Grants to PUBLIC role

CREATE OR REPLACE PROCEDURE SENTRY_grants_to_public ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select user_name, role_name, query_text, end_time

from SNOWFLAKE.ACCOUNT_USAGE.query_history where execution_status = 'SUCCESS'

and query_type = 'GRANT' and

query_text ilike '%to%public%'

order by end_time desc

);

RETURN TABLE(res);

END

$$

Grants to unmanaged schemas outside schema owner

CREATE OR REPLACE PROCEDURE SENTRY_grants_to_unmanaged_schemas_outside_schema_owner ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select table_catalog,

table_schema,

schema_owner,

privilege,

granted_by,

granted_on,

name,

granted_to,

grantee_name,

grant_option

from SNOWFLAKE.ACCOUNT_USAGE.grants_to_roles gtr

join SNOWFLAKE.ACCOUNT_USAGE.schemata s

on s.catalog_name = gtr.table_catalog

and s.schema_name = gtr.table_schema

where deleted_on is null

and deleted is null

and granted_by not in ('ACCOUNTADMIN', 'SECURITYADMIN') //add other roles with MANAGE GRANTS if applicable

and is_managed_access = 'NO'

and schema_owner <> granted_by

order by

table_catalog,

table_schema

);

RETURN TABLE(res);

END

$$

Least Used Role Grants

CREATE OR REPLACE PROCEDURE SENTRY_least_used_role_grants ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(with least_used_roles (user_name, role_name, last_used, times_used) as

(select user_name, role_name, max(end_time), count(*) from SNOWFLAKE.ACCOUNT_USAGE.query_history group by user_name, role_name order by user_name, role_name)

select grantee_name,

role,

nvl(last_used, (select min(start_time) from SNOWFLAKE.ACCOUNT_USAGE.query_history)) last_used,

nvl(times_used, 0) times_used, datediff(day, created_on, current_timestamp()) || ' days ago' age

from SNOWFLAKE.ACCOUNT_USAGE.grants_to_users

left join least_used_roles on user_name = grantee_name and role = role_name

where deleted_on is null order by last_used, times_used, age desc

);

RETURN TABLE(res);

END

$$

Anomalous Application Access

CREATE OR REPLACE PROCEDURE SENTRY_may30_ttps_guidance_anomalous_application_access ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(SELECT

COUNT(*) AS client_app_count,

PARSE_JSON(client_environment) :APPLICATION :: STRING AS client_application,

PARSE_JSON(client_environment) :OS :: STRING AS client_os,

PARSE_JSON(client_environment) :OS_VERSION :: STRING AS client_os_version

FROM

snowflake.account_usage.sessions sessions

WHERE

1 = 1

AND sessions.created_on >= '2024-04-01'

GROUP BY

ALL

ORDER BY

1 DESC

);

RETURN TABLE(res);

END

$$

Aggregate of client IPs leveraged at authentication for service discovery

CREATE OR REPLACE PROCEDURE SENTRY_may30_ttps_guidance_factor_breakdown ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select client_ip, user_name, reported_client_type, first_authentication_factor, count(*)

from snowflake.account_usage.login_history

group by client_ip, user_name, reported_client_type, first_authentication_factor

order by count(*) desc

);

RETURN TABLE(res);

END

$$

Monitored IPs logins

Current IOC's are tied to the listed IP's, often leveraging a JDBC driver, and authenticating via a Password stored locally in Snowflake.

CREATE OR REPLACE PROCEDURE SENTRY_may30_ttps_guidance_ip_logins ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(--

SELECT

*

FROM

snowflake.account_usage.login_history

WHERE

client_ip IN (

'102.165.16.161',

'103.108.229.67',

'103.108.231.51',

'103.108.231.67',

'103.125.233.19',

'103.136.147.130',

'103.136.147.4',

'103.214.20.131',

'103.216.220.19',

'103.216.220.35',

'103.75.11.51',

'103.75.11.67',

'104.129.24.115',

'104.129.24.124',

'104.129.41.195',

'104.129.57.67',

'104.223.91.19',

'104.223.91.28',

'107.150.22.3',

'129.227.46.131',

'129.227.46.163',

'138.199.15.147',

'138.199.15.163',

'138.199.21.227',

'138.199.21.240',

'138.199.34.144',

'138.199.43.66',

'138.199.43.79',

'138.199.43.92',

'138.199.6.195',

'138.199.6.208',

'138.199.6.221',

'138.199.60.16',

'138.199.60.29',

'138.199.60.3',

'141.98.252.190',

'142.147.89.226',

'143.244.47.66',

'143.244.47.79',

'143.244.47.92',

'146.70.117.163',

'146.70.117.210',

'146.70.117.35',

'146.70.117.56',

'146.70.119.24',

'146.70.119.35',

'146.70.124.131',

'146.70.124.216',

'146.70.128.195',

'146.70.128.227',

'146.70.129.131',

'146.70.129.99',

'146.70.132.195',

'146.70.132.227',

'146.70.133.3',

'146.70.133.99',

'146.70.134.3',

'146.70.138.195',

'146.70.144.35',

'146.70.165.227',

'146.70.165.3',

'146.70.166.131',

'146.70.166.176',

'146.70.168.195',

'146.70.168.67',

'146.70.171.112',

'146.70.171.131',

'146.70.171.67',

'146.70.171.99',

'146.70.173.131',

'146.70.173.195',

'146.70.184.3',

'146.70.184.67',

'146.70.185.3',

'146.70.187.67',

'146.70.188.131',

'146.70.196.195',

'146.70.197.131',

'146.70.197.195',

'146.70.198.195',

'146.70.199.131',

'146.70.199.195',

'146.70.200.3',

'146.70.211.67',

'146.70.224.3',

'146.70.225.3',

'146.70.225.67',

'149.102.240.67',

'149.102.240.80',

'149.102.246.16',

'149.102.246.3',

'149.22.81.195',

'149.22.81.208',

'149.40.50.113',

'149.88.104.16',

'149.88.20.194',

'149.88.20.207',

'149.88.22.130',

'149.88.22.143',

'149.88.22.156',

'149.88.22.169',

'154.47.16.35',

'154.47.16.48',

'154.47.29.3',

'154.47.30.131',

'154.47.30.137',

'154.47.30.144',

'154.47.30.150',

'156.59.50.195',

'156.59.50.227',

'162.33.177.32',

'169.150.196.16',

'169.150.196.29',

'169.150.196.3',

'169.150.198.67',

'169.150.201.25',

'169.150.201.29',

'169.150.201.3',

'169.150.203.16',

'169.150.203.22',

'169.150.203.29',

'169.150.223.208',

'169.150.227.198',

'169.150.227.211',

'169.150.227.223',

'173.205.85.35',

'173.205.93.3',

'173.44.63.112',

'173.44.63.67',

'176.123.10.35',

'176.123.3.132',

'176.123.6.193',

'176.123.7.143',

'176.220.186.152',

'178.249.209.163',

'178.249.209.176',

'178.249.211.67',

'178.249.211.80',

'178.249.211.93',

'178.249.214.16',

'178.249.214.3',

'178.255.149.166',

'179.43.189.67',

'184.147.100.29',

'185.156.46.144',

'185.156.46.157',

'185.156.46.163',

'185.188.61.196',

'185.188.61.226',

'185.201.188.34',

'185.201.188.4',

'185.204.1.178',

'185.204.1.179',

'185.213.155.241',

'185.248.85.14',

'185.248.85.19',

'185.248.85.34',

'185.248.85.49',

'185.248.85.59',

'185.254.75.14',

'185.65.134.191',

'188.241.176.195',

'192.252.212.60',

'193.138.7.138',

'193.138.7.158',

'193.19.207.196',

'193.19.207.226',

'193.32.126.233',

'194.110.115.3',

'194.110.115.35',

'194.127.167.108',

'194.127.167.88',

'194.127.199.3',

'194.127.199.32',

'194.230.144.126',

'194.230.144.50',

'194.230.145.67',

'194.230.145.76',

'194.230.147.127',

'194.230.148.99',

'194.230.158.107',

'194.230.158.178',

'194.230.160.237',

'194.230.160.5',

'194.36.25.34',

'194.36.25.4',

'194.36.25.49',

'195.160.223.23',

'198.44.129.35',

'198.44.129.67',

'198.44.129.82',

'198.44.129.99',

'198.44.136.195',

'198.44.136.35',

'198.44.136.56',

'198.44.136.67',

'198.44.136.82',

'198.44.136.99',

'198.44.140.195',

'198.54.130.131',

'198.54.130.147',

'198.54.130.153',

'198.54.130.99',

'198.54.131.131',

'198.54.131.152',

'198.54.131.163',

'198.54.133.131',

'198.54.133.163',

'198.54.134.131',

'198.54.134.99',

'198.54.135.131',

'198.54.135.35',

'198.54.135.67',

'198.54.135.99',

'199.116.118.194',

'199.116.118.210',

'199.116.118.233',

'204.152.216.105',

'204.152.216.115',

'204.152.216.99',

'206.217.205.118',

'206.217.205.119',

'206.217.205.125',

'206.217.205.126',

'206.217.205.49',

'206.217.206.108',

'206.217.206.28',

'206.217.206.48',

'206.217.206.68',

'206.217.206.88',

'209.54.101.131',

'31.170.22.16',

'31.171.154.51',

'37.19.200.131',

'37.19.200.144',

'37.19.200.157',

'37.19.210.2',

'37.19.210.21',

'37.19.210.28',

'37.19.210.34',

'37.19.221.144',

'37.19.221.157',

'37.19.221.170',

'38.240.225.37',

'38.240.225.69',

'43.225.189.132',

'43.225.189.163',

'45.134.140.131',

'45.134.140.144',

'45.134.142.194',

'45.134.142.200',

'45.134.142.207',

'45.134.142.220',

'45.134.212.80',

'45.134.212.93',

'45.134.213.195',

'45.134.79.68',

'45.134.79.98',

'45.155.91.99',

'45.86.221.146',

'46.19.136.227',

'5.47.87.202',

'66.115.189.160',

'66.115.189.190',

'66.115.189.200',

'66.115.189.210',

'66.115.189.247',

'66.63.167.147',

'66.63.167.163',

'66.63.167.195',

'68.235.44.195',

'68.235.44.3',

'68.235.44.35',

'69.4.234.116',

'69.4.234.118',

'69.4.234.119',

'69.4.234.120',

'69.4.234.122',

'69.4.234.124',

'69.4.234.125',

'79.127.217.35',

'79.127.217.44',

'79.127.217.48',

'79.127.222.195',

'79.127.222.208',

'87.249.134.11',

'87.249.134.15',

'87.249.134.2',

'87.249.134.28',

'92.60.40.210',

'92.60.40.225',

'93.115.0.49',

'96.44.189.99',

'96.44.191.131',

'96.44.191.140',

'96.44.191.147'

)

ORDER BY

event_timestamp

);

RETURN TABLE(res);

END

$$

Authentication patterns ordered by timestamp

CREATE OR REPLACE PROCEDURE SENTRY_may30_ttps_guidance_ips_with_factor ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(-- NOTE: IP list is sorted

select event_timestamp, event_type, user_name, client_ip, reported_client_type, first_authentication_factor, second_authentication_factor, is_success

from snowflake.account_usage.login_history

where first_authentication_factor='PASSWORD'

and client_ip in (

'102.165.16.161',

'103.108.229.67',

'103.108.231.51',

'103.108.231.67',

'103.125.233.19',

'103.136.147.130',

'103.136.147.4',

'103.214.20.131',

'103.216.220.19',

'103.216.220.35',

'103.75.11.51',

'103.75.11.67',

'104.129.24.115',

'104.129.24.124',

'104.129.41.195',

'104.129.57.67',

'104.223.91.19',

'104.223.91.28',

'107.150.22.3',

'129.227.46.131',

'129.227.46.163',

'138.199.15.147',

'138.199.15.163',

'138.199.21.227',

'138.199.21.240',

'138.199.34.144',

'138.199.43.66',

'138.199.43.79',

'138.199.43.92',

'138.199.6.195',

'138.199.6.208',

'138.199.6.221',

'138.199.60.16',

'138.199.60.29',

'138.199.60.3',

'141.98.252.190',

'142.147.89.226',

'143.244.47.66',

'143.244.47.79',

'143.244.47.92',

'146.70.117.163',

'146.70.117.210',

'146.70.117.35',

'146.70.117.56',

'146.70.119.24',

'146.70.119.35',

'146.70.124.131',

'146.70.124.216',

'146.70.128.195',

'146.70.128.227',

'146.70.129.131',

'146.70.129.99',

'146.70.132.195',

'146.70.132.227',

'146.70.133.3',

'146.70.133.99',

'146.70.134.3',

'146.70.138.195',

'146.70.144.35',

'146.70.165.227',

'146.70.165.3',

'146.70.166.131',

'146.70.166.176',

'146.70.168.195',

'146.70.168.67',

'146.70.171.112',

'146.70.171.131',

'146.70.171.67',

'146.70.171.99',

'146.70.173.131',

'146.70.173.195',

'146.70.184.3',

'146.70.184.67',

'146.70.185.3',

'146.70.187.67',

'146.70.188.131',

'146.70.196.195',

'146.70.197.131',

'146.70.197.195',

'146.70.198.195',

'146.70.199.131',

'146.70.199.195',

'146.70.200.3',

'146.70.211.67',

'146.70.224.3',

'146.70.225.3',

'146.70.225.67',

'149.102.240.67',

'149.102.240.80',

'149.102.246.16',

'149.102.246.3',

'149.22.81.195',

'149.22.81.208',

'149.40.50.113',

'149.88.104.16',

'149.88.20.194',

'149.88.20.207',

'149.88.22.130',

'149.88.22.143',

'149.88.22.156',

'149.88.22.169',

'154.47.16.35',

'154.47.16.48',

'154.47.29.3',

'154.47.30.131',

'154.47.30.137',

'154.47.30.144',

'154.47.30.150',

'156.59.50.195',

'156.59.50.227',

'162.33.177.32',

'169.150.196.16',

'169.150.196.29',

'169.150.196.3',

'169.150.198.67',

'169.150.201.25',

'169.150.201.29',

'169.150.201.3',

'169.150.203.16',

'169.150.203.22',

'169.150.203.29',

'169.150.223.208',

'169.150.227.198',

'169.150.227.211',

'169.150.227.223',

'173.205.85.35',

'173.205.93.3',

'173.44.63.112',

'173.44.63.67',

'176.123.10.35',

'176.123.3.132',

'176.123.6.193',

'176.123.7.143',

'176.220.186.152',

'178.249.209.163',

'178.249.209.176',

'178.249.211.67',

'178.249.211.80',

'178.249.211.93',

'178.249.214.16',

'178.249.214.3',

'178.255.149.166',

'179.43.189.67',

'184.147.100.29',

'185.156.46.144',

'185.156.46.157',

'185.156.46.163',

'185.188.61.196',

'185.188.61.226',

'185.201.188.34',

'185.201.188.4',

'185.204.1.178',

'185.204.1.179',

'185.213.155.241',

'185.248.85.14',

'185.248.85.19',

'185.248.85.34',

'185.248.85.49',

'185.248.85.59',

'185.254.75.14',

'185.65.134.191',

'188.241.176.195',

'192.252.212.60',

'193.138.7.138',

'193.138.7.158',

'193.19.207.196',

'193.19.207.226',

'193.32.126.233',

'194.110.115.3',

'194.110.115.35',

'194.127.167.108',

'194.127.167.88',

'194.127.199.3',

'194.127.199.32',

'194.230.144.126',

'194.230.144.50',

'194.230.145.67',

'194.230.145.76',

'194.230.147.127',

'194.230.148.99',

'194.230.158.107',

'194.230.158.178',

'194.230.160.237',

'194.230.160.5',

'194.36.25.34',

'194.36.25.4',

'194.36.25.49',

'195.160.223.23',

'198.44.129.35',

'198.44.129.67',

'198.44.129.82',

'198.44.129.99',

'198.44.136.195',

'198.44.136.35',

'198.44.136.56',

'198.44.136.67',

'198.44.136.82',

'198.44.136.99',

'198.44.140.195',

'198.54.130.131',

'198.54.130.147',

'198.54.130.153',

'198.54.130.99',

'198.54.131.131',

'198.54.131.152',

'198.54.131.163',

'198.54.133.131',

'198.54.133.163',

'198.54.134.131',

'198.54.134.99',

'198.54.135.131',

'198.54.135.35',

'198.54.135.67',

'198.54.135.99',

'199.116.118.194',

'199.116.118.210',

'199.116.118.233',

'204.152.216.105',

'204.152.216.115',

'204.152.216.99',

'206.217.205.118',

'206.217.205.119',

'206.217.205.125',

'206.217.205.126',

'206.217.205.49',

'206.217.206.108',

'206.217.206.28',

'206.217.206.48',

'206.217.206.68',

'206.217.206.88',

'209.54.101.131',

'31.170.22.16',

'31.171.154.51',

'37.19.200.131',

'37.19.200.144',

'37.19.200.157',

'37.19.210.2',

'37.19.210.21',

'37.19.210.28',

'37.19.210.34',

'37.19.221.144',

'37.19.221.157',

'37.19.221.170',

'38.240.225.37',

'38.240.225.69',

'43.225.189.132',

'43.225.189.163',

'45.134.140.131',

'45.134.140.144',

'45.134.142.194',

'45.134.142.200',

'45.134.142.207',

'45.134.142.220',

'45.134.212.80',

'45.134.212.93',

'45.134.213.195',

'45.134.79.68',

'45.134.79.98',

'45.155.91.99',

'45.86.221.146',

'46.19.136.227',

'5.47.87.202',

'66.115.189.160',

'66.115.189.190',

'66.115.189.200',

'66.115.189.210',

'66.115.189.247',

'66.63.167.147',

'66.63.167.163',

'66.63.167.195',

'68.235.44.195',

'68.235.44.3',

'68.235.44.35',

'69.4.234.116',

'69.4.234.118',

'69.4.234.119',

'69.4.234.120',

'69.4.234.122',

'69.4.234.124',

'69.4.234.125',

'79.127.217.35',

'79.127.217.44',

'79.127.217.48',

'79.127.222.195',

'79.127.222.208',

'87.249.134.11',

'87.249.134.15',

'87.249.134.2',

'87.249.134.28',

'92.60.40.210',

'92.60.40.225',

'93.115.0.49',

'96.44.189.99',

'96.44.191.131',

'96.44.191.140',

'96.44.191.147'

)

order by event_timestamp desc

);

RETURN TABLE(res);

END

$$

Monitored query history

CREATE OR REPLACE PROCEDURE SENTRY_may30_ttps_guidance_query_history ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(SELECT

query_text,

user_name,

role_name,

end_time

FROM snowflake.account_usage.query_history

WHERE execution_status = 'SUCCESS'

AND query_type NOT in ('SELECT')

--AND user_name= '<USER>'

AND (query_text ILIKE '%create role%'

OR query_text ILIKE '%manage grants%'

OR query_text ILIKE '%create integration%'

OR query_text ILIKE '%alter integration%'

OR query_text ILIKE '%create share%'

OR query_text ILIKE '%create account%'

OR query_text ILIKE '%monitor usage%'

OR query_text ILIKE '%ownership%'

OR query_text ILIKE '%drop table%'

OR query_text ILIKE '%drop database%'

OR query_text ILIKE '%create stage%'

OR query_text ILIKE '%drop stage%'

OR query_text ILIKE '%alter stage%'

OR query_text ILIKE '%create user%'

OR query_text ILIKE '%alter user%'

OR query_text ILIKE '%drop user%'

OR query_text ILIKE '%create_network_policy%'

OR query_text ILIKE '%alter_network_policy%'

OR query_text ILIKE '%drop_network_policy%'

OR query_text ILIKE '%copy%'

)

ORDER BY end_time desc

);

RETURN TABLE(res);

END

$$

Users with static credentials

Recommendation to remove any static credentials (passwords) stored in Snowflake to mitigate the risk of credential stuffing/ password spray attacks.

CREATE OR REPLACE PROCEDURE SENTRY_may30_ttps_guidance_static_creds ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select name, has_password, password_last_set_time, disabled, ext_authn_duo

from snowflake.account_usage.users

where has_password = 'true'

and disabled = 'false'

and ext_authn_duo = 'false'

);

RETURN TABLE(res);

END

$$

Most Dangerous User

CREATE OR REPLACE PROCEDURE SENTRY_most_dangerous_person ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(with role_hier as (

--Extract all Roles

select

grantee_name,

name

from

SNOWFLAKE.ACCOUNT_USAGE.grants_to_roles

where

granted_on = 'ROLE'

and privilege = 'USAGE'

and deleted_on is null

union all

--Adding in dummy records for "root" roles

select

'root',

r.name

from

SNOWFLAKE.ACCOUNT_USAGE.roles r

where

deleted_on is null

and not exists (

select

1

from

SNOWFLAKE.ACCOUNT_USAGE.grants_to_roles gtr

where

gtr.granted_on = 'ROLE'

and gtr.privilege = 'USAGE'

and gtr.name = r.name

and deleted_on is null

)

) --CONNECT BY to create the polyarchy and SYS_CONNECT_BY_PATH to flatten it

,

role_path_pre as(

select

name,

level,

sys_connect_by_path(name, ' -> ') as path

from

role_hier connect by grantee_name = prior name start with grantee_name = 'root'

order by

path

) --Removing leading delimiter separately since there is some issue with how it interacted with sys_connect_by_path

,

role_path as (

select

name,

level,

substr(path, len(' -> ')) as path

from

role_path_pre

) --Joining in privileges from GRANT_TO_ROLES

,

role_path_privs as (

select

path,

rp.name as role_name,

privs.privilege,

granted_on,

privs.name as priv_name,

'Role ' || path || ' has ' || privilege || ' on ' || granted_on || ' ' || privs.name as Description

from

role_path rp

left join SNOWFLAKE.ACCOUNT_USAGE.grants_to_roles privs on rp.name = privs.grantee_name

and privs.granted_on != 'ROLE'

and deleted_on is null

order by

path

) --Aggregate total number of priv's per role, including hierarchy

,

role_path_privs_agg as (

select

trim(split(path, ' -> ') [0]) role,

count(*) num_of_privs

from

role_path_privs

group by

trim(split(path, ' -> ') [0])

order by

count(*) desc

) --Most Dangerous Man - final query

select

grantee_name as user,

count(a.role) num_of_roles,

sum(num_of_privs) num_of_privs

from

SNOWFLAKE.ACCOUNT_USAGE.grants_to_users u

join role_path_privs_agg a on a.role = u.role

where

u.deleted_on is null

group by

user

order by

num_of_privs desc

);

RETURN TABLE(res);

END

$$

Network Policy Change Management

CREATE OR REPLACE PROCEDURE SENTRY_network_policy_changes ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select user_name || ' made the following Network Policy change on ' || end_time || ' [' || query_text || ']' as Events

from SNOWFLAKE.ACCOUNT_USAGE.query_history where execution_status = 'SUCCESS'

and query_type in ('CREATE_NETWORK_POLICY', 'ALTER_NETWORK_POLICY', 'DROP_NETWORK_POLICY')

or (query_text ilike '% set network_policy%' or

query_text ilike '% unset network_policy%')

and query_type != 'SELECT' and query_type != 'UNKNOWN'

order by end_time desc

);

RETURN TABLE(res);

END

$$

Login failures, by User and Reason

CREATE OR REPLACE PROCEDURE SENTRY_num_failures ()

RETURNS TABLE(USER_NAME String, ERROR_MESSAGE String, NUM_OF_FAILURES Integer)

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

user_name,

error_message,

count(*) num_of_failures

from

SNOWFLAKE.ACCOUNT_USAGE.login_history

where

is_success = 'NO'

group by

user_name,

error_message

order by

num_of_failures desc

);

RETURN TABLE(res);

END

$$

Privileged Object Management

CREATE OR REPLACE PROCEDURE SENTRY_privileged_object_changes_by_user ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(SELECT

query_text,

user_name,

role_name,

end_time

FROM SNOWFLAKE.ACCOUNT_USAGE.query_history

WHERE execution_status = 'SUCCESS'

AND query_type NOT in ('SELECT')

AND (query_text ILIKE '%create role%'

OR query_text ILIKE '%manage grants%'

OR query_text ILIKE '%create integration%'

OR query_text ILIKE '%create share%'

OR query_text ILIKE '%create account%'

OR query_text ILIKE '%monitor usage%'

OR query_text ILIKE '%ownership%'

OR query_text ILIKE '%drop table%'

OR query_text ILIKE '%drop database%'

OR query_text ILIKE '%create stage%'

OR query_text ILIKE '%drop stage%'

OR query_text ILIKE '%alter stage%'

)

ORDER BY end_time desc

);

RETURN TABLE(res);

END

$$

SCIM Token Lifecycle

CREATE OR REPLACE PROCEDURE SENTRY_scim_token_lifecycle ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

user_name as by_whom,

datediff('day', start_time, current_timestamp()) || ' days ago' as created_on,

ADD_MONTHS(start_time, 6) as expires_on,

datediff(

'day',

current_timestamp(),

ADD_MONTHS(end_time, 6)

) as expires_in_days

from

SNOWFLAKE.ACCOUNT_USAGE.query_history

where

execution_status = 'SUCCESS'

and query_text ilike 'select%SYSTEM$GENERATE_SCIM_ACCESS_TOKEN%'

and query_text not ilike 'select%where%SYSTEM$GENERATE_SCIM_ACCESS_TOKEN%'

order by

expires_in_days

);

RETURN TABLE(res);

END

$$

Access Count By Column

CREATE OR REPLACE PROCEDURE SENTRY_sharing_access_count_by_column ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

los.value:"objectDomain"::string as object_type,

los.value:"objectName"::string as object_name,

cols.value:"columnName"::string as column_name,

count(distinct lah.query_token) as n_queries,

count(distinct lah.consumer_account_locator) as n_distinct_consumer_accounts

from SNOWFLAKE.DATA_SHARING_USAGE.LISTING_ACCESS_HISTORY as lah

join lateral flatten(input=>lah.application_objects_accessed) as los

join lateral flatten(input=>los.value, path=>'columns') as cols

where true

and los.value:"objectDomain"::string in ('Table', 'View')

and query_date between '2024-03-21' and '2024-03-30'

and los.value:"objectName"::string = 'db1.schema1.sec_view1'

and lah.consumer_account_locator = 'BATC4932'

group by 1,2,3

);

RETURN TABLE(res);

END

$$

Aggregate View of Access Over Time by Consumer

CREATE OR REPLACE PROCEDURE SENTRY_sharing_access_over_time_by_consumer ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

lah.exchange_name,

lah.listing_global_name,

lah.share_name,

los.value:"objectName"::string as object_name,

coalesce(los.value:"objectDomain"::string, los.value:"objectDomain"::string) as object_type,

consumer_account_locator,

count(distinct lah.query_token) as n_queries

from SNOWFLAKE.DATA_SHARING_USAGE.LISTING_ACCESS_HISTORY as lah

join lateral flatten(input=>lah.application_objects_accessed) as los

where true

and query_date between '2024-03-21' and '2024-03-30'

group by 1,2,3,4,5,6

order by 1,2,3,4,5,6

);

RETURN TABLE(res);

END

$$

Shares usage statistics

CREATE OR REPLACE PROCEDURE SENTRY_sharing_listing_usage ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(SELECT

listing_name,

listing_display_name,

event_date,

event_type,

SUM(1) AS count_gets_requests

FROM snowflake.data_sharing_usage.listing_events_daily

GROUP BY 1,2,3,4

);

RETURN TABLE(res);

END

$$

Changes to listings

CREATE OR REPLACE PROCEDURE SENTRY_sharing_listings_alter ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

user_name || ' altered a listing at ' || end_time as Description, execution_status, query_text as Statement

from

SNOWFLAKE.ACCOUNT_USAGE.query_history

where

query_type = 'ALTER'

and query_text ilike '%alter%listing%'

order by

end_time desc

);

RETURN TABLE(res);

END

$$

Reader account creation

CREATE OR REPLACE PROCEDURE SENTRY_sharing_reader_creation_monitor ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

user_name || ' tried to create a managed account at ' || end_time as Description, execution_status, query_text as Statement

from

SNOWFLAKE.ACCOUNT_USAGE.query_history

where

query_type = 'CREATE'

and query_text ilike '%managed%account%'

order by

end_time desc

);

RETURN TABLE(res);

END

$$

Replication usage

CREATE OR REPLACE PROCEDURE SENTRY_sharing_replication_history ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(SELECT

*

FROM

snowflake.account_usage.replication_usage_history

WHERE

TO_DATE(START_TIME) BETWEEN

dateadd(d, -7, current_date) AND current_date

);

RETURN TABLE(res);

END

$$

Changes to shares

CREATE OR REPLACE PROCEDURE SENTRY_sharing_share_alter ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

user_name || ' altered a share at ' || end_time as Description, execution_status, query_text as Statement

from

SNOWFLAKE.ACCOUNT_USAGE.query_history

where

query_type = 'ALTER'

and query_text ilike '%alter%share%'

order by

end_time desc

);

RETURN TABLE(res);

END

$$

Table Joins By Consumer

CREATE OR REPLACE PROCEDURE SENTRY_sharing_table_joins_by_consumer ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(with

accesses as (

select distinct

los.value:"objectDomain"::string as object_type,

los.value:"objectName"::string as object_name,

lah.query_token,

lah.consumer_account_locator

from SNOWFLAKE.DATA_SHARING_USAGE.LISTING_ACCESS_HISTORY as lah

join lateral flatten(input=>lah.application_objects_accessed) as los

where true

and los.value:"objectDomain"::string in ('Table', 'View')

and query_date between '2024-03-21' and '2024-03-30'

)

select

a1.object_name as object_name_1,

a2.object_name as object_name_2,

a1.consumer_account_locator as consumer_account_locator,

count(distinct a1.query_token) as n_queries

from accesses as a1

join accesses as a2

on a1.query_token = a2.query_token

and a1.object_name < a2.object_name

group by 1,2,3

);

RETURN TABLE(res);

END

$$

Stale users

CREATE OR REPLACE PROCEDURE SENTRY_stale_users ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select name, datediff("day", nvl(last_success_login, created_on), current_timestamp()) || ' days ago' Last_Login from SNOWFLAKE.ACCOUNT_USAGE.users

where deleted_on is null

order by datediff("day", nvl(last_success_login, created_on), current_timestamp()) desc

);

RETURN TABLE(res);

END

$$

User to Role Ratio (larger is better)

CREATE OR REPLACE PROCEDURE SENTRY_user_role_ratio ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select

round(count(*) / (select count(*) from SNOWFLAKE.ACCOUNT_USAGE.roles),1) as ratio

from SNOWFLAKE.ACCOUNT_USAGE.users

);

RETURN TABLE(res);

END

$$

Users by Password Age

CREATE OR REPLACE PROCEDURE SENTRY_users_by_oldest_passwords ()

RETURNS TABLE()

LANGUAGE SQL

AS

$$

DECLARE

res RESULTSET;

BEGIN

res :=(select name, datediff('day', password_last_set_time, current_timestamp()) || ' days ago' as password_last_changed from SNOWFLAKE.ACCOUNT_USAGE.users

where deleted_on is null and

password_last_set_time is not null

order by password_last_set_time

);

RETURN TABLE(res);

END

$$

Control Mapping

| tile_identifier | title | dashboard | security_features_checklist | nist_800_53 | nist_800_171 | hitrust_csf_v9 | mitre_attack_saas |

|---|---|---|---|---|---|---|---|

| AUTH-1 | Login failures, by User and Reason | Authentication | AC-7 | 3.5 | PR.DS-5:G5 | T1110- Brute Force | |

| AUTH-3 | Breakdown by Method | Authentication | A5 | 3.5.2, 3.5.3 | PR.AC-1:G7, G10 | T1550 - Use Alternate Authentication Material, T1556 - Modify Authentication Process | |

| CONFIG-1 | Network Policy Change Management | Configuration | A5 | CM-2 | 3.1.1, 3.4.2 | PR.DS-6:G3 | T1098 - Account Manipulation |

| SECRETS-2 | ACCOUNTADMINs that do not use MFA | Secrets & Privileged Access | A2 | CM-2, 3 | 3.5.2 | PR.MA-1:G3 | |

| SECRETS-3 | Privileged Object Management | Secrets & Privileged Access | A11 | DE.CM-6:G3 | |||

| SECRETS-4 | Key Pair Bypass (Password) | Secrets & Privileged Access | A6 | AC-2(1) | PR.MA-1:G3 | T1550 - Use Alternate Authentication Material | |

| SECRETS-5 | SCIM Token Lifecycle | Secrets & Privileged Access | A2, A3 | CM-3 | PR.IP-11:G1 | ||

| SECRETS-8 | Grants to PUBLIC role | Secrets & Privileged Access | AC-3(1) | PR.AC-4:G3 | T1098 - Account Manipulation | ||

| SECRETS-9 | Default Role is ACCOUNTADMIN | Secrets & Privileged Access | AC-3 | PR.AC-7:G8, PR.AT-2:G2* | |||

| SECRETS-10 | Grants to unmanaged schemas outside schema owner | Secrets & Privileged Access | A13 | AC-3(7) | PR.AC-4:G1 | ||

| SECRETS-13 | Stale users | Secrets & Privileged Access | AC-2(3)a | 3.5.6 | PR.AC-4:G3 | ||

| USER-1 | Most Dangerous User | Users | AC-6 | PR.IP-11:G2 | |||

| USER-3 | Users by Password Age | Users | A7 | AC-2(1) | PR.IP-11:G2 | ||

| ROLES-1 | User to Role Ratio (larger is better) | Roles | PR.AC-4:G1 | ||||

| ROLES-2 | Average Number of Role Grants per User (~5-10) | Roles | PR.AC-4:G1 | ||||

| ROLES-3 | Least Used Role Grants | Roles | PR.AC-4:G1 | ||||

| ROLES-5 | Bloated roles | Roles | PR.AC-4:G1 | ||||

| ROLES-7 | ACCOUNTADMIN Grants | Roles | PR.AC-4:G3 | T1060- Permission Group Discovery, T1078 - Privilege Escalation, T1546 - Event Triggered Escalation, T1098 - Account Manipulation | |||

| SHARING-1 | Reader account creation | Data Sharing | |||||

| SHARING-2 | Changes to shares | Data Sharing | |||||

| SHARING-3 | Changes to listings | Data Sharing | |||||

| SHARING-4 | Shares usage statistics | Data Sharing | |||||

| SHARING-5 | Replication usage | Data Sharing | |||||

| SHARING-6 | Aggregate View of Access Over Time by Consumer | Data Sharing | |||||

| SHARING-7 | Access Count By Column | Data Sharing | |||||

| SHARING-8 | Table Joins By Consumer | Data Sharing | |||||

| MAY30_TTPS_GUIDANCE-1 | Monitored IPs logins | MAY30_TTPS_GUIDANCE | |||||

| MAY30_TTPS_GUIDANCE-2 | Aggregate of client IPs leveraged at authentication for service discovery | MAY30_TTPS_GUIDANCE | |||||

| MAY30_TTPS_GUIDANCE-3 | Authentication patterns ordered by timestamp | MAY30_TTPS_GUIDANCE | |||||

| MAY30_TTPS_GUIDANCE-4 | Users with static credentials | MAY30_TTPS_GUIDANCE | |||||

| MAY30_TTPS_GUIDANCE-5 | Monitored query history | MAY30_TTPS_GUIDANCE | |||||

| MAY30_TTPS_GUIDANCE-10 | Anomalous Application Access | MAY30_TTPS_GUIDANCE |

Log Sources for Security Monitoring

Customers can monitor their Snowflake deployment for potential indicators of compromise by integrating Snowflake log sources with their Security Information and Event Monitoring (SIEM) solution. This guide documents the security identifiers and the Information Schema and Account Usage columns that Snowflake recommends customers monitor. In addition, this publication maps columns to the MITRE ATT&CK SaaS Matrix, an industry framework that helps security analysts implement detection and response controls that align to their organization's incident response procedures.

Security Identifiers and Views

| Security Identifier/View | Columns | Schema Location | Latency | MITRE ATT&CK |

|---|---|---|---|---|

APPLICABLE_ROLES | GRANTEEROLE_NAMEROLE_OWNERIS_GRANTABLE | INFORMATION_SCHEMA | n/a | T1060- Permission Group Discovery T1087 - Account Discovery |

STAGES | STAGE_NAMECREATEDLAST_ALTERED | INFORMATION_SCHEMA | n/a | T1213- Data Collection/ Exfiltration T1074 Data Staged |

USAGE_PRIVILEGES | GRANTORGRANTEEPRIVILEGE_TYPEIS_GRANTABLECREATED | INFORMATION_SCHEMA | n/a | T1078- Privilege Escalation |

OBJECT_PRIVILEGES | GRANTORGRANTEEPRIVILEGE_TYPEIS_GRANTABLECREATED | INFORMATION_SCHEMA | n/a | T1078- Privilege Escalation |

ACCESS_HISTORY | QUERY_IDQUERY_START_TIMEUSER_NAMEDIRECT_OBJECTS_ACCESSEDBASE_OBJECTS_ACCESSSED | ACCOUNT_USAGE | 3 hours | T1078- Valid Accounts |

COPY_HISTORY | All Applicable Columns | ACCOUNT_USAGE | 2 Hours | T1213- Data Collection T1074 - Data Staged |

DATA_TRANSFER_HISTORY | START_TIMEEND_TIMESOURCE_CLOUDSOURCE_REGIONTARGET_CLOUDTARGET_REGION | ACCOUNT_USAGE | 2 Hours | T1213- Data Collection T1074 - Data Staged |

GRANTS_TO_ROLES | CREATED_ONMODIFIED_ONPRIVILEGEGRANTED_ONNAMEGRANTED_TOGRANTEE_NAMEGRANT_OPTIONGRANTED_BYDELETED_ON | ACCOUNT_USAGE | 2 Hours | T1078- Privilege Escalation |

GRANTS_TO_USERS | CREATED_ONDELETED_ONROLEGRANTED_TOGRANTEE_NAMEGRANTED_BY | ACCOUNT_USAGE | 2 hours | T1078- Privilege Escalation |

LOGIN_HISTORY | EVENT_TIMESTAMPEVENT_TYPEUSER_NAMECLIENT_IPREPORTED_CLIENT_TYPEFIRST_AUTHENTICATION_FACTORSECOND_AUTHENTICATION_FACTORIS_SUCCESS | ACCOUNT_USAGE | 2 hours | T1078.004- Cloud Accounts |

MASKING_POLICIES | POLICY_NAMECREATEDLAST_ALTEREDDELETED | ACCOUNT_USAGE | 2 hours | T1080- Taint Shared Content TA0005 - Defense Evasion |

QUERY_HISTORY | All Applicable Columns | ACCOUNT_USAGE | 45 minutes | TA0003 - Persistence TA0003 - Valid Accounts |

ROLES | CREATED_ONDELETED_ONNAME | ACCOUNT_USAGE | 2 hours | TA0003 - Persistence |

ROW_ACCESS_POLICIES | POLICY_NAMECREATEDLAST_ALTEREDDELETED | ACCOUNT_USAGE | 2 hours | T1080- Taint Shared Content TA0005 - Defense Evasion |

SESSIONS | SESSION_IDCREATED_ONUSER_NAMEAUTHENTICATION_METHODLOGIN_EVENT_IDCLIENT_APPLICATION_VERSIONCLIENT_APPLICATION_IDCLIENT_ENVIORNMENTCLIENT_BUILD_IDCLIENT_VERSION | ACCOUNT_USAGE | 3 hours | TA0003 - Persistence T1550 - Use Alternate Authentication Material |

STAGES | STAGE_NAMECREATEDLAST_ALTEREDDELETED | ACCOUNT_USAGE | 2 hours | T1074 - Data Staged |

USERS | All Applicable Columns | ACCOUNT_USAGE | 2 hours | TA0003 - Persistence TA0003 - Valid Accounts |

DATABASES | DATABASE_NAMECREATEDLAST_ALTEREDDELETED | ACCOUNT_USAGE | 2 hours | T1074 - Data Staged |

TABLES | TABLE_OWNERCREATEDLAST_ALTEREDDELETED | ACCOUNT_USAGE | 2 hours | T1074 - Data Staged |

Architecture

This file contains information on the project's architecture decisions and components.

Programming language

Main language is python, intended to be as compatible as possible with Snowpark python conda channel.

The python code is managed through poetry. Poetry was chosen for its support of lock files which provides some reproducibility guarantees in the python ecosystem.

Dependencies

The project dependencies are defined in pyproject.toml and locked in

poetry.lock. The application needs to run in all of the supported

environments:

- Native application (follows conda channel, but has additional considerations

compared to SiS, e.g.

py38only is supported) - Streamlit in Snowflake (follows conda channel)

- Local streamlit (least restrictive)

- Docker container (least restrictive)

The pinned versions are an approximation of the strictest environment (Native apps).

CI/CD

CI/CD is implemented using nix flake. This allows for the GitHub actions to execute the exact same code as a developer would execute on their machine providing strong repeatability and consistency guarantees.

CI

Main CI entry point is nix flake check. It will perform all syntax checks and

code lints. A GitHub action calls nix flake check on commits into the main

branch.

CD

Deployment can be triggered by manually calling corresponding GitHub action and depend on GitHub secrets or secrets passed through environment variables.

The deployment uses snowcli for pushing objects into Snowflake, relying on it for authentication and logic.

GitHub actions are provided in the .github directory.

Project organization

Main source code resides in ./src directory following the src

layout

convention.

Query files organization

The query files are located in ./src/queries in individual directories. This

approach allows the SQL to be both imported into Python and be executed in

Snowpark session and the SQL can be run through EXECUTE IMMEDIATE FROM

Every query directory must have:

- the SQL code in a

.sqlfile. The file contents must be executable in a stored procedure, a worksheet or a stored procedure1 - query metadata in a

README.mdfile

Streamlit code

Main Streamlit entry point is

Authentication.py.

The

individual pages are located in pages/ subdirectory as required by Streamlit

multipage-apps.

The pages structure was chosen to match the original source of the application.

-

some queries, namely ones calling

SHOW GRANTSrequire running the stored procedure withCALLERrights (doc). Streamlit in Snowflake runs with owner's rights, thus such queries cannot currently be a part of this deployment model. ↩

Contributing to Sentry

Upstreaming a code contribution should be done by forking this repository, creating a feature branch and opening a pull request.

When adding a new query or changing an existing one, the documentation needs to be re-generated.

Make sure to check DEVELOPMENT.md for an overview of the architectural decisions of the project.

Setting up development environment

Python

poetry is used to manage the Python environment. Most IDEs can create a

virtual environment from the pyproject.toml. Alternatively, use poetry install --with=dev in the project root to create it.

Pre-commit

The project uses pre-commit configuration embedded into flake.nix as a

separate file linters.nix.

To install it using nix:

nix develop .#pre-commit --command bash -c "exit"

Alternatively, have the pre-commit run ruff and isort. For more up to date

list of checkers, see the linters.nix file. Since the pre-commit is

only a part of the centrally-managed CI, pre-commit-config.yml is ignored by

git.

All checks

The main entry point to all linters is nix flake check. The repository's CI is

configured to run it on commits to the branch

Deploying into a controlled Snowflake account

Repository comes with GitHub actions that manage the deployment. Under the hood

the actions are implemented by nix applications, so can be triggered locally

to the same effect.

Generating documentation

Project uses a combination of mdbook, mdsh and python scripts to generate and publish the documentation.

Building is wrapped in a Nix application "mkMdBook" and deployment to GitHub pages is done by an action.

To preview the documentation locally:

cd docs/mdbook serve

All needed utilities are a part of the development shell.

The process to add an example query to a page in Sentry:

- Create a new directory under

src/queries, for example "demo_query" - Add the query

.sqlfile in the new directory. It should have the same name as the directory ("demo_query.sql") - Add a

README.mdfile for the query with some front matter (see the example patch below) - Add a

Queryobject tosrc/common/queries.py. The argument should be the name of the directory (Query("demo_query")) - Add a

Tileobject to a collection of tiles insrc/common/tiles.py(see the example patch below)

By default a query will be rendered as a table. You can customize the query

display by passing extra render_f argument (see examples in tiles.py).

Sample diff

diff --git a/src/common/queries.py b/src/common/queries.py

index 0976a14..0aeab41 100644

--- a/src/common/queries.py

+++ b/src/common/queries.py

@@ -71,3 +71,5 @@ STATIC_CREDS = Query("may30_ttps_guidance_static_creds")

QUERY_HISTORY = Query("may30_ttps_guidance_query_history")

ANOMALOUS_APPLICATION_ACCESS = Query("may30_ttps_guidance_anomalous_application_access")

+

+DEMO_QUERY = Query("demo_query")

diff --git a/src/common/tiles.py b/src/common/tiles.py

index 73ffa1b..20e2de3 100644

--- a/src/common/tiles.py

+++ b/src/common/tiles.py

@@ -79,6 +79,7 @@ altair_chart = partial(

) # NOTE: theme="streamlit" is default

AuthTiles = _mk_tiles(

+ queries.DEMO_QUERY,

{

"query": NUM_FAILURES,

"render_f": lambda data: altair_chart(

diff --git a/src/queries/demo_query/README.md b/src/queries/demo_query/README.md

new file mode 100644

index 0000000..aeff47f

--- /dev/null

+++ b/src/queries/demo_query/README.md

@@ -0,0 +1,7 @@

+---

+title: A demo query

+Tile Identifier: AUTH-0

+Dashboard: Authentication

+---

+

+Here be dragons

diff --git a/src/queries/demo_query/demo_query.sql b/src/queries/demo_query/demo_query.sql

new file mode 100644

index 0000000..2e3761f

--- /dev/null

+++ b/src/queries/demo_query/demo_query.sql

@@ -0,0 +1 @@

+SELECT 1